The End of Moore's Law? Or The End of an Age?

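Moore's Law, in its simplest form, states that the number of transistors on a chip doubles roughly every two years; a popular variant says that a computer's processing power doubles every 18 months.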
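To get a feel for what steady doubling means, the growth factor after t years is 2^(t / doubling period). The short sketch below compares the 18-month figure with the two-year figure Moore himself settled on in 1975. It assumes an idealized, perfectly regular doubling, and `growth_factor` is a hypothetical helper written only for this illustration.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Idealized model (assumption): how many times larger a quantity
    becomes after `years` if it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Compare the two commonly quoted doubling periods over 15 years.
for label, period in [("18 months", 1.5), ("24 months", 2.0)]:
    print(f"Doubling every {label}: x{growth_factor(15, period):,.0f} after 15 years")
# Doubling every 18 months: x1,024 after 15 years
# Doubling every 24 months: x181 after 15 years
```

Six extra months per doubling shrinks the 15-year gain from about a thousandfold to under two hundredfold, which is why even a modest slowdown in the trend matters so much.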

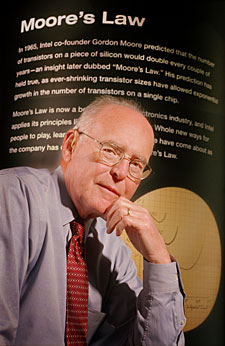

The law was first described by Intel co-founder Gordon E. Moore in a paper he published in 1965. The processing power of circuits has been growing exponentially since 1958, and that trend has continued to this very day. However, many experts now believe that the trend will finally start to slow this year, in 2013. We have all grown up in the silicon age, and silicon has driven every computing device we have ever used. But the current model is starting to show signs that its time is running out. Even Intel Corporation has admitted this.

“Computer power simply cannot maintain its rapid exponential rise using standard silicon technology.”

Gordon E. Moore in 2005

So does that mean we should go out and start panicking? You're absolutely right! What will happen when each year's new cellphones, computers, tablets, and video game systems are literally the same, hardware-wise? No difference in performance or power. What? That is totally unheard of; since the dawn of computers we have literally known nothing else. What incentive would we have to go out and buy a new computer, for example? Quite frankly, none. Consumer spending will grind to a halt, businesses will start to collapse, and in about 15 to 25 years we will be in another recession. Yikes, right? Luckily we humans are very smart, and if one thing above all else gets our inventive juices flowing, it is necessity. Necessity is the mother of invention, and here the necessity is keeping businesses going, the economy running, and food on our tables for our families.

“The problem is that a Pentium chip today has a layer almost down to 20 atoms across. When that layer gets down to about 5 atoms across, it’s all over. You have two effects. Heat—the heat generated will be so intense that the chip will melt. You can literally fry an egg on top of the chip, and the chip itself begins to disintegrate. And second of all, leakage—you don’t know where the electron is anymore. The quantum theory takes over. The Heisenberg Uncertainty Principle says you don’t know where that electron is anymore, meaning it could be outside the wire, outside the Pentium chip, or inside the Pentium chip. So there is an ultimate limit set by the laws of thermodynamics and set by the laws of quantum mechanics as to how much computing power you can do with silicon.”

Photolithography in action: how transistors are patterned onto a silicon wafer.

So where does that leave us? Realistically, in the near future we will probably see current computer designs being tweaked. In the long term, though, there are solutions on the horizon, such as molecular computers or the ultimate computer, the quantum computer. Both are likely decades away from being viable, so it will be very interesting to see where we go and how we solve the problem of offering consumers increasingly powerful hardware every two years. We will bear witness to the pinnacle of silicon technology within a decade or two, and then to the dawn of a new technological age. Those of you young enough today may actually live to see the rise of quantum computing, and perhaps the beginning of a new technological revolution. Perhaps one day the history books will call it the Quantum Revolution.

- Robby Silk